What is a Site Search for a website?

Anyone who has ever been confused in a store about where to find a product understands the value of a knowledgeable salesperson who can guide them.

Similarly, every customer of an online store knows the value of an internal site search. Site search is a search engine built for one particular website: it answers user queries by looking them up in the website's own catalogs.

This feature lets users type what they need and go straight to it, without having to browse the whole website.

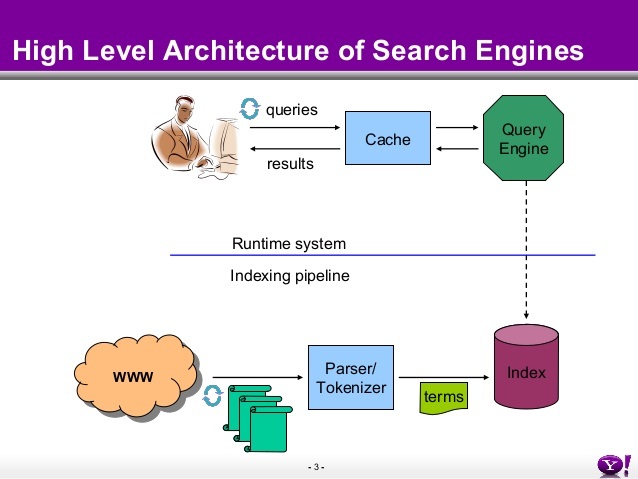

How does search work on a website?

A high level of engineering expertise goes into making a site search effective and relevant.

Let’s understand the steps one by one.

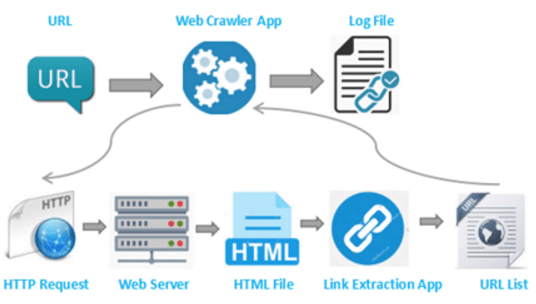

1. Crawler:

The crawler is the input side of the search engine: it discovers and fetches new pages so that they can be indexed. It must handle large numbers of pages and keep its copies fresh, tracking when each page was last visited, because pages change over time.

Depending on its design, the crawler may cover a single site or many different sites.

For enterprise search, the crawler must efficiently reach both internal company documents and external sources such as personal and corporate directories, covering resources like emails, PDFs, and database records along with any other company information that needs to be searchable.
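The crawl loop can be sketched as follows, assuming a single site. The in-memory `SITE` dictionary and the `fetch_links` helper are hypothetical stand-ins for real HTTP requests and HTML link extraction. The sketch crawls breadth-first, stays on the seed's host, and records when each page was fetched so that stale pages could be revisited later.

```python
import time
from collections import deque
from urllib.parse import urljoin, urlparse

# Hypothetical in-memory site standing in for real HTTP fetches:
# each URL maps to the raw hrefs found on that page.
SITE = {
    "https://example.com/": ["/shoes", "/bags", "https://other.com/x"],
    "https://example.com/shoes": ["/shoes/running", "/"],
    "https://example.com/bags": [],
    "https://example.com/shoes/running": ["/shoes"],
}

def fetch_links(url):
    """Placeholder for 'download the page and extract its hrefs' (assumption)."""
    return SITE.get(url, [])

def crawl(seed, max_pages=100):
    """Breadth-first crawl limited to the seed's host.

    Returns {url: fetch_time} in visit order; a scheduler could compare
    these timestamps later to decide which pages are stale.
    """
    host = urlparse(seed).netloc
    queue = deque([seed])
    seen = {seed}
    last_visited = {}
    while queue and len(last_visited) < max_pages:
        url = queue.popleft()
        last_visited[url] = time.time()
        for href in fetch_links(url):
            absolute = urljoin(url, href)  # resolve relative links
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return last_visited

print(list(crawl("https://example.com/")))
```

In a real crawler, `fetch_links` would download the page (respecting robots.txt and rate limits), and pages with old timestamps would be re-queued.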

2. Conversion:

Documents found by the crawler are often not plain text, nor in the format the search engine requires. They may be HTML pages, PowerPoint files, and so on.

A conversion tool turns them into consistent text plus metadata.

For HTML and XML, most of this work is handled by the text transformation component. For other formats, such as PDF or Microsoft Office documents, however, conversion is the first step before further processing.

Character encoding can also cause problems. Unicode covers most of the world's languages, but documents may be stored in different encodings (for example UTF-8, UTF-16, or older language-specific encodings), so each document must be decoded correctly before it is processed.
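Here is a minimal conversion sketch for HTML only, built on Python's standard `html.parser` module. It decodes raw bytes with an explicitly given encoding (an assumption; real converters usually detect it from HTTP headers or a `<meta charset>` tag), drops `<script>` and `<style>` content, and returns the visible text plus the `<title>` as metadata. The `TextExtractor` and `convert` names are hypothetical, and PDF or Office formats would need separate libraries.

```python
from html.parser import HTMLParser

class TextExtractor(HTMLParser):
    """Collects visible text and the <title>; skips <script> and <style>."""

    def __init__(self):
        super().__init__()  # convert_charrefs=True turns &amp; into &
        self.parts = []
        self.title = ""
        self._skip_depth = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1
        elif tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._skip_depth:
            return  # ignore code and styling
        if self._in_title:
            self.title += data  # keep the title as metadata
        elif data.strip():
            self.parts.append(data.strip())

def convert(raw, encoding="utf-8"):
    """Decode raw bytes and return consistent text plus metadata."""
    parser = TextExtractor()
    parser.feed(raw.decode(encoding))
    parser.close()
    return {"title": parser.title.strip(), "text": " ".join(parser.parts)}
```

Passing the wrong `encoding` would either raise an error or silently garble non-ASCII characters, which is the encoding problem described above.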

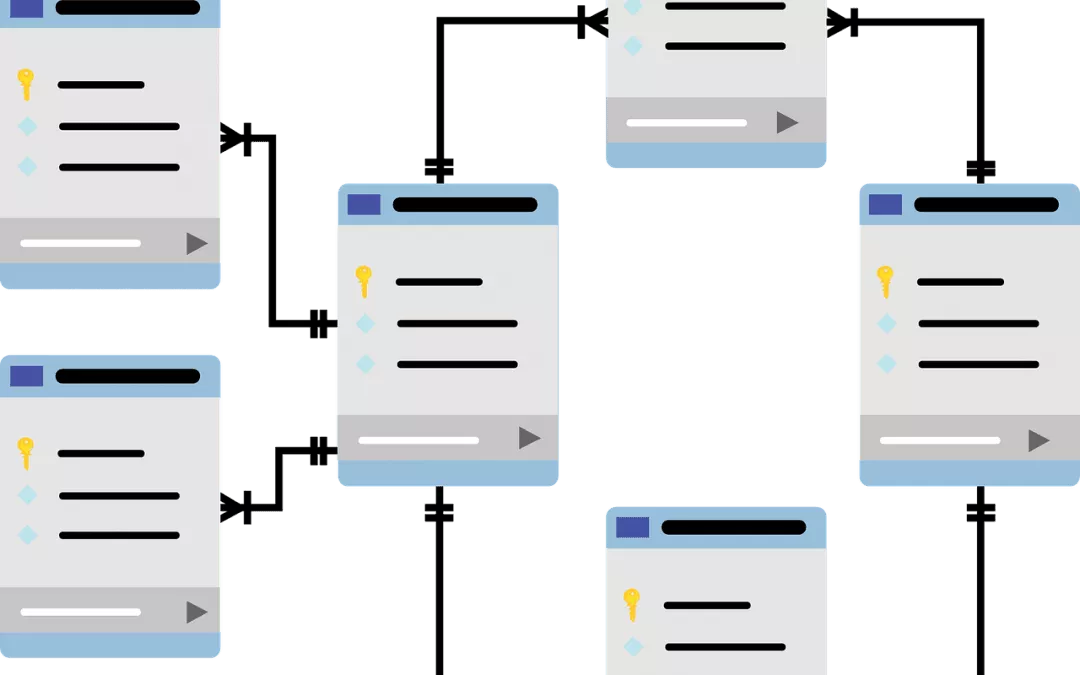

3. Document Data Store:

The document data store is the database that manages the site's documents and structured data; for a company, it can be very large.

It contains document metadata, information extracted from the documents, links, and the text associated with the links, called ‘anchor text’.

Many websites use a simpler storage system than a full relational database to keep retrieval times low.
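A small document data store can be sketched with SQLite from the Python standard library. The schema (the `documents` and `links` tables) and the helper functions are illustrative assumptions, not a prescribed layout; they keep each page's text and metadata together with its outgoing links and their anchor text.

```python
import sqlite3

SCHEMA = """
CREATE TABLE documents (
    id         INTEGER PRIMARY KEY,
    url        TEXT UNIQUE NOT NULL,
    title      TEXT,
    body       TEXT,
    fetched_at REAL                 -- when the crawler last saw the page
);
CREATE TABLE links (
    source_id   INTEGER NOT NULL REFERENCES documents(id),
    target_url  TEXT NOT NULL,
    anchor_text TEXT                -- the clickable text of the link
);
"""

def open_store(path=":memory:"):
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn

def add_document(conn, url, title, body, fetched_at, links):
    """Store one converted page plus its outgoing (target_url, anchor_text) links."""
    cur = conn.execute(
        "INSERT INTO documents (url, title, body, fetched_at) VALUES (?, ?, ?, ?)",
        (url, title, body, fetched_at),
    )
    conn.executemany(
        "INSERT INTO links (source_id, target_url, anchor_text) VALUES (?, ?, ?)",
        [(cur.lastrowid, target, anchor) for target, anchor in links],
    )
    conn.commit()
    return cur.lastrowid

def anchor_texts_for(conn, url):
    """All anchor text that other pages use when linking to `url`."""
    rows = conn.execute(
        "SELECT anchor_text FROM links WHERE target_url = ? ORDER BY rowid", (url,)
    )
    return [row[0] for row in rows]
```

Looking up anchor text for a URL is useful because the way other pages describe a page often makes good index terms for it.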

4. Text Transformation:

- Parsing- Processes the document's text tokens while recognizing structural elements such as links, headings, and titles. The first step is tokenizing both the document and the query so they can be compared. Tokens are typically runs of alphanumeric characters separated by spaces or punctuation.

- Stop Words- Very common words that make poor index terms, called stop words, are usually removed.

Examples include “of”, “for”, and “to”. These words are needed to form sentences but contribute little to their meaning, so removing them rarely changes search results.

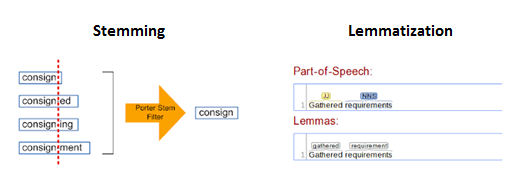

- Stemming- Groups words derived from a common stem (for example, “run”, “runs”, and “running”), increasing the chance that words in documents and queries match each other. It must be done carefully, because stemming too aggressively can merge unrelated words and cause search problems.

- Link Extraction and Analysis- While parsing, identifies links and their anchor text and stores them in the document data store.

- Information Extraction- Identifies index terms that are more complex than single words and need special computation. There is special emphasis on semantic content analysis, such as named entity recognizers, which can identify specific content (company names, person names, etc.).

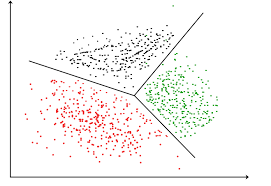

- Classifier- Identifies class-related metadata for documents or parts of documents, assigning predefined labels (such as “business” or “household”). Other types of classification include identifying spam and advertising content, which helps rank the data.
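The parsing, stop-word, and stemming steps can be sketched as a small pipeline. Everything here is a simplifying assumption: the tokenizer keeps only ASCII letters and digits, the stop list is tiny, and `naive_stem` is a crude suffix stripper written for illustration, not the Porter stemmer or any other standard algorithm.

```python
import re

# A tiny illustrative stop list (real lists are longer and tuned per site).
STOP_WORDS = {"a", "an", "and", "for", "in", "of", "on", "the", "to", "with"}

def tokenize(text):
    """Parsing: lowercase the text and split it into runs of ASCII letters/digits."""
    return re.findall(r"[a-z0-9]+", text.lower())

def remove_stop_words(tokens):
    """Stopping: drop very common words that carry little meaning."""
    return [t for t in tokens if t not in STOP_WORDS]

def naive_stem(token):
    """Stemming: crude suffix stripping for illustration (NOT Porter)."""
    if token.endswith("ing") and len(token) > 5:
        stem = token[:-3]
        if re.search(r"[aeiou]", stem):  # "string" -> "str" would be wrong
            if stem[-1] == stem[-2] and stem[-1] not in "lsz":
                stem = stem[:-1]  # "runn" -> "run", but keep "sell"
            return stem
    if token.endswith("s") and not token.endswith("ss") and len(token) > 3:
        return token[:-1]  # "shoes" -> "shoe", but keep "dress"
    return token

def transform(text):
    """Apply the same pipeline to documents and queries so their terms match."""
    return [naive_stem(t) for t in remove_stop_words(tokenize(text))]

print(transform("Running shoes for the trail"))  # ['run', 'shoe', 'trail']
```

Even this toy stemmer shows why stemming needs care: without the vowel check, "string" would be reduced to "str".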

5. Index Creation:

- Document Statistics- Gathers statistical information about words, features, and documents, such as how often each word occurs in each document. The ranking component uses this information to compute scores for documents.

Which statistics are needed is determined by the retrieval model and its associated ranking algorithm. They are stored in lookup tables for fast retrieval.

- Weighting- How often index terms occur in a document matters during ranking. The weighting component uses the document statistics to calculate term weights and stores them in lookup tables, which makes query processing more efficient.

- Inversion- The core of indexing. It converts the stream of document-term information coming from the text transformation component into term-document information, producing inverted indexes that make query processing faster.

- Index Distribution- Distributes the indexes across multiple computers, and potentially multiple sites on a network, so that indexing and queries can be processed in parallel.
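The statistics, weighting, inversion, and distribution steps can be illustrated with a toy in-memory index. This sketch assumes the documents are already tokenized and uses plain tf-idf (term frequency × log of inverse document frequency) as the weighting scheme, which is one common choice rather than the method of any particular engine. The `shard_for` helper, which assigns terms to machines by CRC32, is hypothetical.

```python
import math
import zlib
from collections import Counter, defaultdict

def build_index(docs):
    """docs: {doc_id: [tokens]} -> (inverted index, per-document lengths).

    Inversion: turn the document -> terms stream into term -> postings,
    where each posting is (doc_id, term frequency), a basic document statistic.
    """
    index = defaultdict(list)
    doc_lengths = {}
    for doc_id, tokens in docs.items():
        doc_lengths[doc_id] = len(tokens)
        for term, tf in Counter(tokens).items():
            index[term].append((doc_id, tf))
    return dict(index), doc_lengths

def tfidf_weights(index, n_docs):
    """Weighting: precompute tf * idf per (term, doc) so queries can look it up."""
    weights = {}
    for term, postings in index.items():
        idf = math.log(n_docs / len(postings))
        for doc_id, tf in postings:
            weights[(term, doc_id)] = tf * idf
    return weights

def shard_for(term, n_shards):
    """Index distribution: choose which of n machines holds a term's postings."""
    return zlib.crc32(term.encode("utf-8")) % n_shards

docs = {"d1": ["run", "shoe", "trail"], "d2": ["run", "bag"], "d3": ["shoe", "shoe", "sale"]}
index, lengths = build_index(docs)
weights = tfidf_weights(index, len(docs))
print(index["shoe"])  # [('d1', 1), ('d3', 2)]
```

CRC32 is used instead of Python's built-in `hash()` because string hashes are randomized per process, and every machine must agree on which shard owns a term. Note that a term occurring in every document gets an idf, and hence a weight, of zero.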

6. User Interaction:

- Query input- Provides the interface and parser for the query language. For example, putting words in quotes tells the system that they must occur together as a phrase rather than individually.

- Query Transformation- Improves the initial query using a range of techniques, both before and after ranking. Tokenizing, stopping, and stemming are the simplest; the more advanced ones include spell checking and query suggestions, which propose alternatives to the query or add terms to make it more meaningful.

- Results Output- Displays the ranked results produced by the ranking component.
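Query input and query transformation can be sketched in a few lines: `parse_query` separates quoted phrases from loose terms, and `suggest` offers a spelling correction by picking the closest vocabulary word with `difflib.get_close_matches` from the standard library. The vocabulary and the 0.75 similarity cutoff are arbitrary assumptions for illustration; production spell checkers often rely on query logs and edit-distance models instead.

```python
import difflib
import re

# Hypothetical vocabulary, e.g. terms drawn from the product catalog.
VOCABULARY = ["running", "shoes", "sandals", "trail", "jacket"]

def parse_query(query):
    """Split a query into quoted phrases and individual terms (all lowercased)."""
    phrases = [p.lower() for p in re.findall(r'"([^"]+)"', query)]
    remainder = re.sub(r'"[^"]+"', " ", query)  # drop the quoted parts
    terms = remainder.lower().split()
    return phrases, terms

def suggest(term, vocabulary=VOCABULARY, cutoff=0.75):
    """Return the closest vocabulary word for a likely misspelling, else the term."""
    if term in vocabulary:
        return term
    matches = difflib.get_close_matches(term, vocabulary, n=1, cutoff=cutoff)
    return matches[0] if matches else term

print(parse_query('"trail shoes" jackte'))  # (['trail shoes'], ['jackte'])
print(suggest("jackte"))                    # jacket
```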

7. Ranking:

- Scoring- uses the ranking algorithm to score the documents based on the retrieval model.

- Performance Optimization- Helps choose the ranking algorithms and index designs that decrease response time and increase query throughput.

- Distribution- Ranking can be distributed in the same way as the index. A query broker allocates queries to specific processors in the network and assembles the final ranked list for each query. Caching is another form of distribution: indexes, and sometimes ranked document lists, are kept in local memory for faster processing and retrieval.
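Scoring, distribution, and caching can be sketched together. The sketch assumes a document-partitioned index in which each hypothetical shard holds precomputed term weights for its own documents. `search` plays the query broker: it sums the weights of the query terms per document on every shard, merges the shards' scores, and keeps the top `k`, while `functools.lru_cache` stands in for a result cache.

```python
import functools
import heapq

# Hypothetical document-partitioned index: each shard stores precomputed
# weights {(term, doc_id): weight} for its own documents only.
SHARDS = [
    {("shoe", "d1"): 0.4, ("run", "d1"): 0.4, ("shoe", "d3"): 0.9},
    {("run", "d2"): 0.4, ("bag", "d2"): 1.1, ("shoe", "d4"): 0.2},
]

def score_shard(shard, terms):
    """Score one shard: sum the weights of the query terms for each document."""
    scores = {}
    for (term, doc_id), weight in shard.items():
        if term in terms:
            scores[doc_id] = scores.get(doc_id, 0.0) + weight
    return scores

@functools.lru_cache(maxsize=1024)
def search(terms, k=10):
    """Query broker: score every shard, merge the results, keep the top k.

    `terms` must be a tuple so that lru_cache can hash it.
    """
    merged = {}
    for shard in SHARDS:
        merged.update(score_shard(shard, terms))  # shards hold disjoint doc sets
    top = heapq.nlargest(k, merged.items(), key=lambda item: item[1])
    return tuple(doc_id for doc_id, _ in top)

print(search(("shoe", "run")))  # ('d3', 'd1', 'd2', 'd4')
```

A cache like this returns stale results once the index changes, so it would need clearing (here, `search.cache_clear()`) after reindexing. Scanning every weight in a shard is also only acceptable in a toy; real engines read just the postings of the query terms.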

8. Evaluation:

- Logging- Tracking all user queries supports query suggestion, spell checking, and several other tasks that improve search effectiveness over time.

- Ranking analysis- Uses evaluation measures suited to the application. For many searches, what matters is the quality of the top-ranked documents rather than the whole list.

- Performance Analysis- Measures such as response time and throughput are used to assess performance; they can also be tested with simulations that replace human input with mathematical models.
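The three evaluation activities can each be sketched in a few lines: counting normalized queries in a log, computing precision at k (the fraction of the top k results that are relevant, a common measure when only the top of the list matters), and timing a search function to get mean response time and throughput. The function names are hypothetical, and the relevance judgments would have to come from your own data.

```python
import time
from collections import Counter

query_log = Counter()

def log_query(query):
    """Logging: record each query, normalized to lowercase single-spaced text."""
    query_log[" ".join(query.lower().split())] += 1

def precision_at_k(ranked, relevant, k):
    """Ranking analysis: share of the top-k ranked documents that are relevant."""
    return sum(1 for doc in ranked[:k] if doc in relevant) / k

def measure(search_fn, queries):
    """Performance analysis: mean response time (s) and throughput (queries/s)."""
    start = time.perf_counter()
    for query in queries:
        search_fn(query)
    elapsed = time.perf_counter() - start
    return elapsed / len(queries), len(queries) / elapsed

log_query("Running Shoes")
log_query("running  shoes")
print(query_log.most_common(1))  # [('running shoes', 2)]
```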

Why does my website need a Search bar?

A highly efficient website converts visitors into customers. Users who spend a lot of time searching for a product lose interest.

When your website has hundreds of pages for different products, they cannot all be listed on the home page for lack of space, so a dedicated search engine is needed.

Logged search queries also show which products are most popular, providing data for tuning the search algorithm.

Site search can also reduce your website's bounce rate, which may have a positive effect on your SEO.

An optimized site search could also make your site eligible for the sitelinks search box in Google search results, increasing organic traffic. Note, however, that Google announced in 2024 that it would stop showing the sitelinks search box.

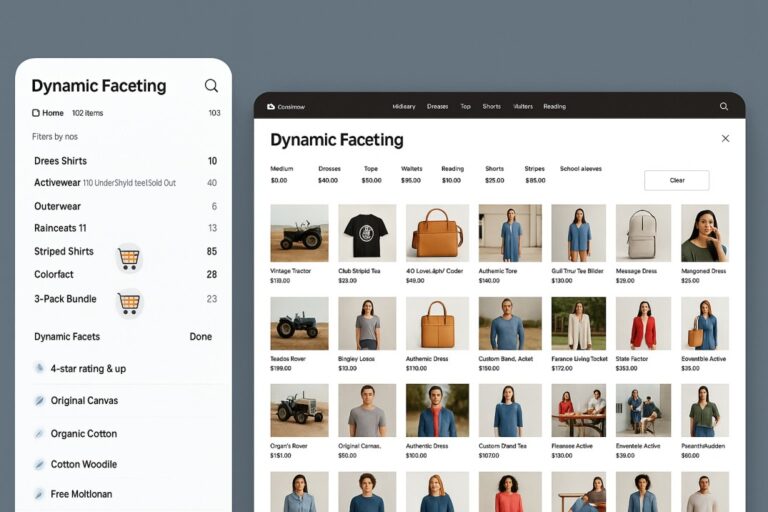

What is the difference between site search and navigation?

Site search works on the idea that the user already knows what they are looking for and hence can directly type a keyword to locate the product on the website.

Navigation, on the other hand, presents the categories of products on the website, catering to users who want to explore the site with no particular product in mind.

Alternatives to Google Site Search

Google Site Search started as an easy, ad-free way for websites to add search for a fee. Google discontinued it in 2017 and directed users to Google Custom Search Engine (since renamed Programmable Search Engine).

Custom Search Engine offers several benefits: its basic features are free, and it makes it simple to control which pages, from your own site or other sites, are indexed.

Its main drawback is that it displays ads from Google AdSense, and blocking them costs extra; ads on your own search results can hurt the company's image as owner of the website.

There are alternatives to Google Site Search that help company websites have more control over the advertisement displays as well as the search results. These can be on-premise platforms as well as SaaS solutions with cloud hosting.

SaaS search solutions are the easiest of the lot: they index the site's content and deliver search results automatically, and they offer APIs to control how results are displayed on the website. Managed search infrastructure and the ability to serve multiple channels (such as web and mobile) and multiple languages are further benefits.

Providers in this category include Expertrec, SiteSearch 360, Swiftype, Algolia, Cludo, and Klevu (for e-commerce).

Cloud providers offer customizable site search as services hosted in their clouds. With these, reindexing so that new fields appear in search results can be somewhat difficult.

The big players in this space are Amazon CloudSearch and Microsoft's Azure Search (now Azure AI Search).

Native or default site search is the search built into content management systems and prepackaged e-commerce solutions.

Examples are WordPress, Joomla, and HubSpot, which have built-in site search functions.

Internal Search Solutions on E-commerce Platforms

These require no installation and no extra cost, but they are not as effective as a standalone solution custom-built for the website.

Examples include Magento and Shopify.

Open source solutions take a lot of effort to customize for the website and run on the website's own server. The costs may be higher, but the result fits perfectly, like a garment tailored specifically for you.

Create a Site Search Engine for your Website

Here is how to create a site search engine for your website with Expertrec.

Expertrec is a custom search engine tool that needs only your website's URL to build a search engine for your website. Changes can be made easily from the Expertrec dashboard without any coding.

The tool is automated and free to try. The process is simple: you log in, choose your nearest data center region, and add your website or sitemap URL. This starts crawling and indexing your pages. Once indexing is done, you can try out the search, and any changes you want to make to the rankings are easy to accommodate.

Once you are satisfied, installing the search on your website is easy.

Site Search Best Practices

- Position the search bar where it is easy to find.

- Placeholder text gives visitors an idea of what they can search for on your website.

- Recent searches remind users of what they were looking for.

- Typeahead is an autocomplete feature that suggests products as the user types.

- Product images shown in the search bar's suggestions are a great option.

- Spell correction lets customers know when they have typed something wrong.

- A no-results page should appear when nothing matches what the user is searching for. Suggest similar products or alternatives on this page.

- The search results page, which appears after a query, should be designed to convey your brand's vision.

- Mobile UI: consider how your search will look in mobile browsers compared with desktop or tablet.

- Site search tracking helps you analyze what users search for across the different products on your website.
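Typeahead can be sketched as prefix search over a sorted list of phrases using the standard `bisect` module. The `Typeahead` class and the phrase list are hypothetical stand-ins for your product names or popular queries; real typeahead services usually also rank suggestions by popularity rather than alphabetically.

```python
import bisect

class Typeahead:
    """Prefix completion over a sorted list of phrases."""

    def __init__(self, phrases):
        # Lowercase, deduplicate, and sort once so lookups can binary-search.
        self.phrases = sorted({p.lower() for p in phrases})

    def complete(self, prefix, limit=5):
        prefix = prefix.lower()
        i = bisect.bisect_left(self.phrases, prefix)  # first phrase >= prefix
        results = []
        # All phrases starting with `prefix` sit next to each other in sorted order.
        while (i < len(self.phrases) and len(results) < limit
               and self.phrases[i].startswith(prefix)):
            results.append(self.phrases[i])
            i += 1
        return results

ta = Typeahead(["Running Shoes", "Running Socks", "Rain Jacket", "Trail Shoes"])
print(ta.complete("run"))  # ['running shoes', 'running socks']
```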

FAQs

1. What are some site search features and benefits?

Internal site search has many features and benefits, including:

- It makes searching the website efficient and helps users locate specific products, converting more visitors into customers.

- Businesses can make use of the statistics of the user searches to use in their marketing strategies.

- It is easier for businesses to make their content more discoverable even with many pages on the site.

2. How to choose the right internal site search?

- The functionality of the site search is important. It should be able to sort your pages, categorize and rank them and all this should be done with efficiency to reduce errors.

- It should be easy for your team to work on and develop. It should not have very complicated features making your experience daunting.

- It should be easy to mold the general framework into exactly what you need for your website.

- Pricing matters somewhat less than the other factors, but once you have shortlisted candidates, compare their prices before deciding.

- The site search solutions should be secure and reliable.

3. How can I improve my internal Site Search engine?

First, recognize site search as an important part of your website's user experience, and research which customizations would improve that experience.

Keeping track of user search statistics and understanding user expectations will guide customizations to the search engine's functionality.

4. What are the three types of Search Engines?

- Crawler-based: crawl the web for pages, automatically detect changes to the pages they have listed, and update their index accordingly. For example, Google.

- Human-powered Directories: derive information from human sources and submissions. For example, an earlier version of Yahoo.

- Hybrid search engines: combine both crawler type and human-powered directory searches.

5. How to set up site search in Google Analytics?

In Universal Analytics (the version these steps describe, retired in July 2023), sign in to your account, open Admin, and choose the view in which you want to set up site search tracking. Under View Settings, turn on site search tracking, enter the query parameter your search results URLs use (for example, q), optionally enable site search categories, and click Save. In Google Analytics 4, site search tracking is part of enhanced measurement in the web data stream settings.
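Site search tracking works because the search term appears in the results page URL as a query parameter (for example, `?q=running+shoes`). The sketch below uses a hypothetical `search_term` helper and an assumed list of common parameter names to read such a term from a URL; it can help you confirm which parameter name your own search pages use before entering it in Analytics.

```python
from urllib.parse import parse_qs, urlparse

def search_term(url, params=("q", "s", "search", "query", "keyword")):
    """Return the first site-search term found in the URL's query string, if any.

    The parameter names are assumptions; use whatever your search URLs contain.
    """
    query = parse_qs(urlparse(url).query)  # also decodes '+' and %XX escapes
    for name in params:
        if name in query:
            return query[name][0]
    return None

print(search_term("https://example.com/search?q=running+shoes&page=2"))  # running shoes
```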
