What is a content-based filtering recommendation engine?

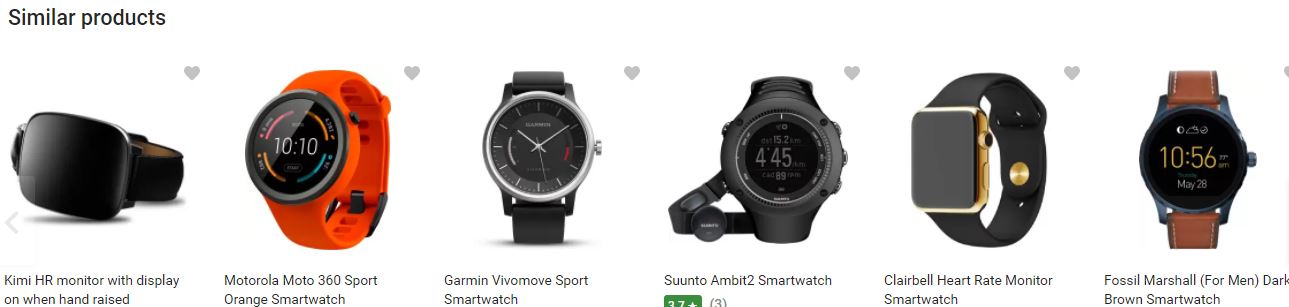

A recommendation engine picks the best items out of a large catalog to display to a user. A content-based recommender picks these items based on their content or characteristics. In the item-to-item form described here, it does not take users’ preferences or ratings into account. You have probably seen this on e-commerce sites, where "similar products" recommendations are produced by a similarity algorithm.

Why do businesses implement recommendation engines?

- To cut the time for product discovery.

- To boost sales through upselling, cross-selling, and bundling.

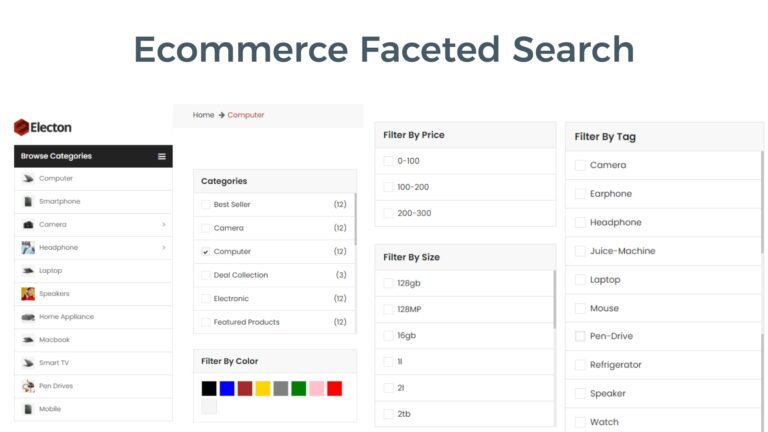

Content-based recommenders recommend items that are similar to the current item. They focus on the properties of items: two items are considered similar when their properties are similar.

The first step is to build an item profile for every item or product in your inventory. For example, the profile of an iPhone could include price, display size, RAM, storage capacity, etc.

Item profiles are easy to obtain in some cases, such as mobile phones. In other cases, such as images or articles, they are harder to obtain.

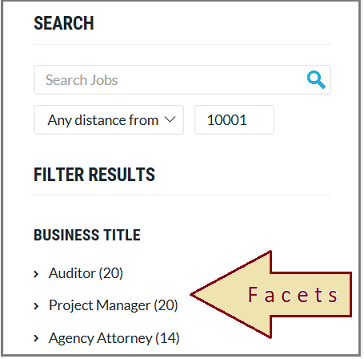

Here are some commonly used content-based filtering methods:

- TF-IDF

- Neural Networks

- Clustering algorithms

- Jaccard similarity

- Cosine similarity

The TF-IDF method:

- Remove stop words, for example: and, the, of, but …

- Calculate the TF-IDF score (term frequency multiplied by inverse document frequency), which measures the importance of a word in a document.

Term frequency (TF) measures how frequently a term occurs in a document. Inverse document frequency (IDF) measures how rare the term is across all documents.

- TF(t) = (Number of times term t appears in a document) / (Total number of terms in the document)

- IDF(t) = log_e(Total number of documents / Number of documents with term t in it)
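The steps and formulas above can be sketched in Python as follows. This is a minimal sketch, not a production implementation: the stop-word list holds only the four words mentioned above, tokenization is a plain whitespace split, the sample descriptions are made up for illustration, and the function names (`tokenize`, `tf`, `idf`, `tf_idf`) are our own.

```python
import math

# Assumed, deliberately tiny stop-word list; real systems use a longer one.
STOP_WORDS = {"and", "the", "of", "but"}

def tokenize(text):
    """Lowercase, split on whitespace, and drop stop words."""
    return [w for w in text.lower().split() if w not in STOP_WORDS]

def tf(term, tokens):
    """Term frequency: occurrences of the term / total terms in the document."""
    return tokens.count(term) / len(tokens) if tokens else 0.0

def idf(term, corpus):
    """Inverse document frequency using the natural logarithm (log_e)."""
    containing = sum(1 for tokens in corpus if term in tokens)
    # IDF is undefined for a term found in no document; return 0.0 in that case.
    return math.log(len(corpus) / containing) if containing else 0.0

def tf_idf(term, tokens, corpus):
    """TF-IDF score of a term in one document of the corpus."""
    return tf(term, tokens) * idf(term, corpus)

# Hypothetical product descriptions, used only for illustration.
docs = [
    "the red apple of the orchard",
    "the orange and the lemon",
    "apple pie and orange juice",
]
corpus = [tokenize(d) for d in docs]

print(corpus[0])                             # tokens left after stop-word removal
print(tf_idf("orchard", corpus[0], corpus))  # term unique to the first document
print(tf_idf("apple", corpus[0], corpus))    # term shared with another document
```

A term that occurs in only one description ("orchard") scores higher than one shared across descriptions ("apple"), which is the behavior the IDF factor is meant to produce.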

Example:

Let's assume that there are two documents containing the following words.

Document 1

| Word | Count |
| --- | --- |
| the | 2 |
| apple | 1 |
| tree | 3 |

Document 2

| Word | Count |
| --- | --- |
| the | 1 |
| orange | 2 |
| tree | 1 |

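Calculating the term frequency and inverse document frequency for d1 (Document 1) and d2 (Document 2), where D is the set of both documents. Base-10 logarithms are used here; the choice of base only rescales the scores.

TF(“tree”, d1) = 3/6 = 0.5

TF(“tree”, d2) = 1/4 = 0.25

IDF(“tree”, D) = log10(2/2) = 0

TF-IDF(“tree”, d1) = 0.5 × 0 = 0

TF-IDF(“tree”, d2) = 0.25 × 0 = 0

TF(“apple”, d1) = 1/6 ≈ 0.167

TF(“apple”, d2) = 0/4 = 0

IDF(“apple”, D) = log10(2/1) ≈ 0.301

TF-IDF(“apple”, d1) ≈ 0.167 × 0.301 ≈ 0.050

TF-IDF(“apple”, d2) = 0 × 0.301 = 0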

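As the example shows, a word that appears in every document (“tree”) gets a score of 0, while a word that is distinctive to one document (“apple”) gets a positive score. This is why TF-IDF scores are widely used in text-based recommender systems.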
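The worked example can be checked with a short script. This is a sketch under the same assumptions as the example: word counts are taken directly from the two documents, "the" is kept rather than removed as a stop word, and base-10 logarithms are used. The names `documents`, `tf`, `idf`, and `scores` are our own.

```python
import math
from collections import Counter

# Word counts copied from the two example documents.
d1 = Counter({"the": 2, "apple": 1, "tree": 3})
d2 = Counter({"the": 1, "orange": 2, "tree": 1})
documents = {"d1": d1, "d2": d2}
all_docs = list(documents.values())

def tf(term, counts):
    """Occurrences of the term divided by the total word count of the document."""
    return counts[term] / sum(counts.values())

def idf(term, docs):
    """Base-10 log of (number of documents / documents containing the term)."""
    containing = sum(1 for counts in docs if counts[term] > 0)
    return math.log10(len(docs) / containing)

# TF-IDF score for every word that occurs in each document.
scores = {
    name: {term: tf(term, counts) * idf(term, all_docs) for term in counts}
    for name, counts in documents.items()
}

for name, term_scores in scores.items():
    for term, score in term_scores.items():
        print(name, term, round(score, 3))
```

With unrounded intermediate values, "apple" in d1 comes out at about 0.050, and every word shared by both documents ("the", "tree") scores 0.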

After calculating the TF-IDF scores, we can find out which items are close to each other using a similarity measure such as cosine similarity or the Jaccard index.
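Both measures are easy to sketch in plain Python. The code below assumes each item is represented as a dictionary mapping words to TF-IDF scores; the three item vectors and their values are hypothetical, and the function names are our own. Cosine similarity uses the scores themselves, while the Jaccard index only looks at which words the items share.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two sparse vectors stored as dicts."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an all-zero vector has no direction; treat as dissimilar
    return dot / (norm_a * norm_b)

def jaccard_index(a, b):
    """Size of the shared word set divided by the size of the combined word set."""
    set_a, set_b = set(a), set(b)
    union = set_a | set_b
    return len(set_a & set_b) / len(union) if union else 0.0

# Hypothetical TF-IDF vectors for three items (illustrative values only).
items = {
    "item_a": {"apple": 0.5, "juice": 0.2},
    "item_b": {"apple": 0.4, "pie": 0.3},
    "item_c": {"orange": 0.6, "juice": 0.1},
}

# Rank the other items by cosine similarity to item_a, most similar first.
current = items["item_a"]
ranked = sorted(
    (name for name in items if name != "item_a"),
    key=lambda name: cosine_similarity(current, items[name]),
    reverse=True,
)
print(ranked)
print(jaccard_index(items["item_a"], items["item_b"]))
```

A recommender built this way would show the top entries of `ranked` as the "similar products" for the current item.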