If your marketing strategy still hinges on the same old A/B test routine (split the audience, swap one element, wait weeks for results), you’re likely missing major optimization opportunities. Classic A/B testing has been the bedrock of experimentation for years, but today’s fast-moving, data-rich digital landscape demands more than two-variant comparisons. In this deep dive, we’ll explore where traditional A/B tests fall short and what modern approaches can offer instead.

The Limits of Classic A/B Test Methods

Slow Feedback Cycles

As audiences fragment across devices, regions, and segments, each test group receives less traffic, so reaching adequate statistical power takes longer. That delay slows decision-making and reduces agility, especially in highly dynamic markets.
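To make the traffic problem concrete, here is a rough sketch of the standard sample-size approximation for comparing two conversion rates. The function names, the 5% baseline, the 10% relative lift, and the daily traffic figures are illustrative assumptions, not numbers from a real campaign.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed per variant for a two-sided two-proportion z-test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the significance level
    z_beta = NormalDist().inv_cdf(power)           # critical value for the desired power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_to_finish(n_per_variant, daily_visitors, variants=2):
    """Days needed when daily traffic is split evenly across variants."""
    return math.ceil(n_per_variant * variants / daily_visitors)


# Hypothetical inputs: 5% baseline conversion, hoping to detect a 10% relative lift.
n = sample_size_per_variant(baseline_rate=0.05, relative_lift=0.10)
print("visitors per variant:", n)
print("days with 10,000 visitors/day:", days_to_finish(n, 10_000))
# Same test run only on a segment that receives a quarter of the traffic.
print("days with 2,500 visitors/day:", days_to_finish(n, 2_500))
```

The required sample per variant does not shrink when the audience does, so a segment with a quarter of the traffic needs roughly four times as many days to reach the same power.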

Narrow Focus, Narrow Impact

Traditional A/B tests typically compare only one variable at a time. That siloed approach can overlook interactions between elements (e.g., headline and image) and miss broader patterns in user behavior, limiting what you learn from each test.

Oversimplification of Audience Behavior

Users differ in expectations and preferences. A variant that wins overall may underperform for high-value segments like repeat buyers or mobile users. Classic A/B tests that report only an aggregate result often miss these nuances.
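A small sketch shows how an aggregate winner can hide a segment-level loss. The visitor and conversion counts below are made up for illustration, and the function names are hypothetical.

```python
# Hypothetical results: (segment, variant) -> (visitors, conversions)
results = {
    ("new", "A"): (1000, 30),
    ("new", "B"): (1000, 50),
    ("returning", "A"): (500, 60),
    ("returning", "B"): (500, 50),
}


def overall_rates(results):
    """Pool every segment and return the conversion rate per variant."""
    totals = {}
    for (_segment, variant), (visitors, conversions) in results.items():
        v, c = totals.get(variant, (0, 0))
        totals[variant] = (v + visitors, c + conversions)
    return {variant: c / v for variant, (v, c) in totals.items()}


def segment_winners(results):
    """Return the variant with the highest conversion rate in each segment."""
    rates = {}
    for (segment, variant), (visitors, conversions) in results.items():
        rates.setdefault(segment, {})[variant] = conversions / visitors
    return {
        segment: max(by_variant, key=by_variant.get)
        for segment, by_variant in rates.items()
    }


overall = overall_rates(results)
print("overall winner:", max(overall, key=overall.get))
print("winner per segment:", segment_winners(results))
```

With these invented numbers, variant B wins on the pooled conversion rate while variant A still converts better among returning visitors, which is exactly the kind of result an aggregate-only report would hide.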

Too Much Focus on Significance

A declared “winner” with a marginal lift may not truly move long-term KPIs, especially if the sample size is small or the testing period is misaligned with seasonality or campaign shifts.

What to Use Instead: Smarter Experimentation Strategies

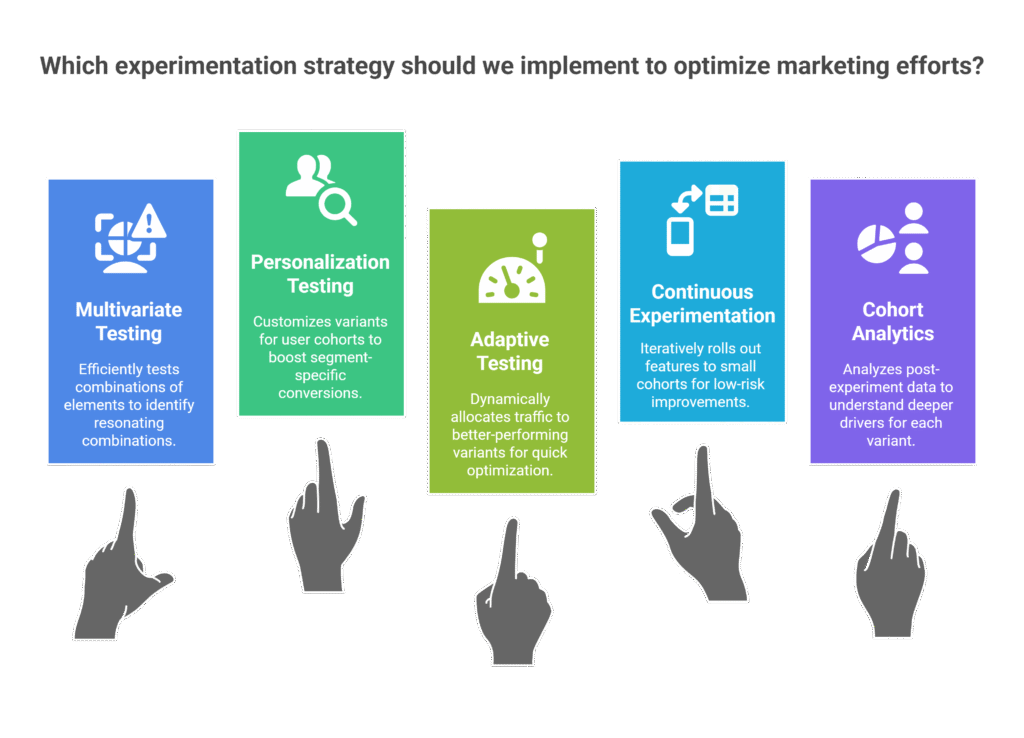

Multivariate (MVT) Testing

Test combinations of headline, layout, CTA, image, and copy simultaneously rather than one element at a time. You gain insight into which combinations truly resonate, not just which isolated elements perform.
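As a minimal sketch of a full-factorial multivariate setup, the snippet below enumerates every combination of a few page elements. The element names and copy are placeholders chosen for illustration.

```python
from itertools import product

# Hypothetical page elements and the options to test for each one.
elements = {
    "headline": ["Save time", "Save money"],
    "image": ["product", "lifestyle"],
    "cta": ["Buy now", "Start free trial"],
}


def build_variants(elements):
    """Return one dict per combination of element options (full factorial)."""
    names = list(elements)
    return [dict(zip(names, combo)) for combo in product(*elements.values())]


variants = build_variants(elements)
print(len(variants), "combinations to test")
for variant in variants:
    print(variant)
```

The number of combinations is the product of the option counts (2 x 2 x 2 = 8 here), so each added element multiplies the traffic the test needs.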

Personalization and Segmentation Testing

Serve customized variants to defined user cohorts (e.g., new vs returning, geography, device). Test against a baseline to uncover what resonates per segment and boost conversions where it matters most.

Adaptive and Multi-Armed Bandit Testing

Rather than fixed 50/50 splits, these algorithms dynamically allocate traffic toward better-performing variants in real time, optimizing more quickly and reducing wasted exposure to underperformers.
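One common bandit approach is Thompson sampling. The simulation below is a simplified sketch, assuming two variants with made-up true conversion rates that the algorithm cannot see; it is not a description of any particular tool's implementation.

```python
import random


def thompson_sampling(true_rates, rounds=5000, seed=42):
    """Simulate Thompson sampling with Beta(1, 1) priors; return pulls per variant."""
    rng = random.Random(seed)
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    for _ in range(rounds):
        # Draw a plausible conversion rate for each variant from its posterior.
        samples = [
            rng.betavariate(successes[i] + 1, failures[i] + 1)
            for i in range(len(true_rates))
        ]
        arm = samples.index(max(samples))  # show the variant with the best draw
        # Simulate whether this visitor converts (hidden true rate, illustration only).
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return [s + f for s, f in zip(successes, failures)]


# Hypothetical true conversion rates: variant 0 at 5%, variant 1 at 15%.
pulls = thompson_sampling([0.05, 0.15])
print("visitors sent to each variant:", pulls)
```

As evidence accumulates, draws for the weaker variant rarely come out on top, so most traffic drifts to the stronger one without a fixed split.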

Continuous Experimentation & Feature Flagging

Build an experimentation culture where new features, messaging, or UI tweaks roll out to small cohorts first, then adjust as data arrives. This keeps product and marketing improvements iterative and low risk.
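A percentage rollout is often implemented by hashing a user ID into a stable bucket. The sketch below assumes string user IDs and a hypothetical flag called `new-checkout`; real feature-flag tools may bucket users differently.

```python
import hashlib


def in_rollout(user_id, flag_name, rollout_percent):
    """Deterministically decide whether a user is inside a percentage rollout."""
    key = f"{flag_name}:{user_id}".encode("utf-8")
    # Map the hash to a stable bucket in the range 0..9999.
    bucket = int(hashlib.sha256(key).hexdigest()[:8], 16) % 10_000
    return bucket < rollout_percent * 100


# Hypothetical user base and flag name.
users = [f"user-{i}" for i in range(10_000)]
exposed = [u for u in users if in_rollout(u, "new-checkout", 10)]
print(f"{len(exposed)} of {len(users)} users see the new feature")
```

Because the bucket depends only on the flag name and user ID, a user keeps the same experience across visits, and raising the percentage only adds users rather than reshuffling those already exposed.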

Insights-Driven Cohort Analytics

Analyze post-experiment data across cohorts to understand deeper drivers, such as lifetime value, retention, or churn, for each variant.

The Hidden Costs of Sticking to Classic A/B Tests

- Opportunity Cost of Watered-Down Wins

Small lifts repeated across many narrow tests can erode strategic momentum.

- Undiscovered Lift from Interactions

When elements interact, like a headline working best with a specific image, you miss out if you test them separately.

- Segment-Level Misses

If a variant wins overall but fails for a high-value group, you lose big-picture value.

- Resource Drain

A constant cycle of A/B tests can strain time, tools, and team bandwidth without always delivering proportionate returns.

How to Transition: Best Practices

- Audit Your Experiment Portfolio

Review recent A/B tests. Identify where results were modest or narrowly scoped.

- Prioritize High-Impact Areas

Focus on pages, features, or messaging tied to high traffic or revenue.

- Plan MVT or Personalization Scaffolding

Define which elements you want to test together (copy, layout, user segment) and prepare variants accordingly.

- Adopt Smarter Testing Tools

Tools with built-in bandit allocation, personalization engines, and cohort analytics accelerate smarter experimentation.

- Measure Beyond Immediate Lifts

Complement conversion metrics with retention, LTV, average order value, and segment-level performance.

ExpertRec’s Role in Smarter Experimentation

- Provides personalization-driven variants based on user behavior and intent

- Enables multivariate testing across copy, layout, and product recommendations

- Supports dynamic traffic allocation (bandit-style optimization) rather than fixed splits

- Delivers cohort-level analytics, measuring performance by user segment, revenue impact, and repeat visits

Conclusion

If you’re still relying on classic A/B test setups to drive decisions, you’re likely leaving growth on the table. Modern experimentation offers richer insights, faster optimization, and far better alignment with segmented, personalization-first strategies. Evolve from slow, narrow split tests to smarter multivariate and adaptive methods, and unlock the real potential in your digital experiences.

FAQs

1. What’s the difference between A/B and multivariate testing?

A/B testing changes one element at a time. Multivariate testing varies multiple elements together to show how combinations perform relative to each other.

2. Can multivariate testing work with limited traffic?

MVT requires more traffic than a basic A/B test because every combination needs its own sample. If traffic is low, smart segmentation or bandit testing may be more effective.

3. How do bandit algorithms reduce experimentation time?

They continuously shift traffic toward better-performing variants, quickly phasing out losers and accelerating learning.

4. Is personalization testing still randomized?

Yes, but traffic is split across segments and variants in a controlled way, often with different weights or logic applied per cohort.

5. What metrics should I track beyond lift in conversion rates?

Look at retention, cohort LTV, average order value, and revenue per user. These give a fuller picture of experiment impact.