Autocomplete is one of the most potent tools in eCommerce: it speeds up search, steers customers toward relevant products, and reduces friction. Yet without testing, guesswork can easily lead to distraction, redundancy, or missed revenue. In this in-depth article, we explore five autocomplete optimizations validated through A/B testing and explain why each matters for performance and user experience.

Pre‑Merchandising vs Clean Input

Some sites pre-populate the autocomplete widget with trending terms or promotional suggestions before a user types. This can be distracting when the user already has a specific query in mind. A/B tests show that visitors in search mode prefer a clean, empty widget that respects task-focused intent, which leads to higher click-through and conversion rates.

Diverse Content Mix in Suggestions

Autocomplete widgets often include mixed content: query suggestions, product hits, category links, even banners. A/B testing different layouts confirms that mixing brand names, categories, and products improves discovery, but overloading the UI can reduce clarity. Optimal layouts deliver a curated mix tailored to user context and intent.
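
One way to keep a mixed layout from overloading the widget is to cap how many slots each content type may take. The sketch below is illustrative only: the `Suggestion` class, the `kind` labels, and the cap values are assumptions made for this example, not part of any particular product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Suggestion:
    text: str
    kind: str     # hypothetical labels: "query", "category", "product"
    score: float  # relevance score from an upstream ranker (assumed)


def build_mixed_list(candidates, caps, max_total=8):
    """Pick the highest-scoring candidates while respecting a per-kind cap."""
    used = {}
    picked = []
    for s in sorted(candidates, key=lambda c: c.score, reverse=True):
        if len(picked) >= max_total:
            break
        # Skip a candidate once its content type has used up its slots.
        if used.get(s.kind, 0) >= caps.get(s.kind, 0):
            continue
        used[s.kind] = used.get(s.kind, 0) + 1
        picked.append(s)
    return picked


candidates = [
    Suggestion("running shoes", "query", 0.9),
    Suggestion("running shorts", "query", 0.8),
    Suggestion("running socks", "query", 0.7),
    Suggestion("Trail Runner X", "product", 0.6),
    Suggestion("Footwear", "category", 0.5),
]
caps = {"query": 2, "product": 1, "category": 1}
mixed = build_mixed_list(candidates, caps)
```

Each A/B variant can then be expressed as a different `caps` dictionary, which keeps layout experiments to a configuration change.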

Semantic Deduplication to Enhance Diversity

A recent study introduced semantic de‑boosting: suppressing semantically redundant suggestions in autocomplete using embedding similarity. A/B testing showed that this increases the diversity of suggestions, improves add-to-cart rates, and reduces null search sessions, as users find new, distinct options more efficiently.
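
The idea can be sketched with a greedy filter over an already ranked list. This is a simplified illustration, not the method from the study: it drops near-duplicates outright instead of de-boosting them, and the embeddings, the function names, and the 0.9 threshold are all assumptions chosen for the example.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def dedupe(ranked, embeddings, threshold=0.9):
    """Keep a suggestion only if it is not too similar to one already kept.

    `ranked` is assumed to be ordered best-first, so the higher-ranked
    member of each near-duplicate group survives.
    """
    kept = []
    for text in ranked:
        vec = embeddings[text]
        if all(cosine(vec, embeddings[k]) < threshold for k in kept):
            kept.append(text)
    return kept


# Toy 2-D embeddings; a real system would use a sentence-embedding model.
embeddings = {
    "running shoes": [1.0, 0.0],
    "shoes for running": [0.98, 0.05],
    "running socks": [0.2, 0.9],
}
ranked = ["running shoes", "shoes for running", "running socks"]
diverse = dedupe(ranked, embeddings)
```

The threshold itself is a natural candidate for A/B testing: too low and distinct suggestions disappear, too high and near-duplicates remain.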

Personalized Completion Based on User Context

Embedding-based personalization, which uses a user’s recent queries or session behavior, can lift autocomplete performance significantly. In the eBay use case, adding a context embedding to ranking improved retrieval metrics by 20‑30% and online performance by up to 10% for active sessions. A/B tests validate that contextual personalization leads to higher relevance and conversion.
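
A minimal sketch of context-aware re-ranking follows. It is not the eBay implementation: it assumes each candidate already has a base score and an embedding, represents the session as the mean of recent query embeddings, and blends the two signals with a hypothetical `weight` parameter.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def mean_vector(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def personalize(candidates, session_vectors, weight=0.3):
    """Re-rank (text, base_score, embedding) tuples using session context.

    With no session history, the base ranking is returned unchanged.
    """
    if not session_vectors:
        ordered = sorted(candidates, key=lambda c: c[1], reverse=True)
        return [text for text, _, _ in ordered]
    context = mean_vector(session_vectors)
    scored = [
        ((1 - weight) * base + weight * cosine(vec, context), text)
        for text, base, vec in candidates
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored]


# Toy data: the user has recently searched for electronics.
candidates = [
    ("apple juice", 0.7, [0.0, 1.0]),
    ("apple iphone", 0.6, [1.0, 0.0]),
]
recent_queries = [[1.0, 0.0], [0.9, 0.1]]
personalized = personalize(candidates, recent_queries)
cold_start = personalize(candidates, [])
```

Here the session context promotes "apple iphone" above the globally more popular "apple juice", while a visitor with no history still sees the default order.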

UI Element and Timing Tweaks

Autocomplete timing (when suggestions appear) and UI styling (hover behavior, color, spacing) strongly affect engagement. A/B testing specific behaviors, such as displaying suggestions after two characters versus three, or visually separating product suggestions from query suggestions, can improve CTR and reduce bounce.
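
To test a character threshold, each visitor needs to land in the same variant on every request. The sketch below shows one common approach, hashing a user identifier into a bucket. The experiment name, the variant labels, and the 50/50 split are assumptions for illustration.

```python
import hashlib

# Minimum characters typed before suggestions appear, per variant (assumed values).
VARIANTS = {"control": 2, "treatment": 3}


def assign_variant(user_id, experiment="autocomplete-min-chars"):
    """Deterministically map a user to a variant with a roughly even split."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    return "control" if int(digest, 16) % 2 == 0 else "treatment"


def should_show_suggestions(user_id, typed):
    """Apply the character threshold of the user's assigned variant."""
    return len(typed.strip()) >= VARIANTS[assign_variant(user_id)]
```

Including the experiment name in the hash keeps assignments independent across experiments, so a user in the treatment group of one test is not automatically in the treatment group of the next.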

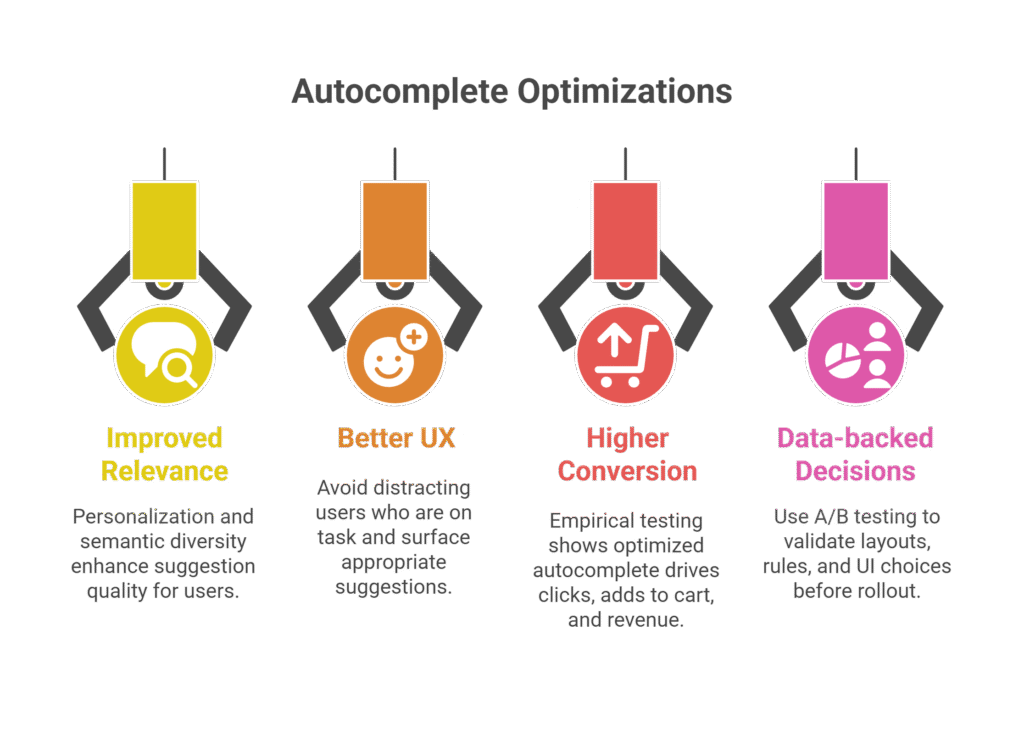

Why These Autocomplete Optimizations Matter

- Improved Relevance: Personalization and semantic diversity enhance suggestion quality.

- Better UX: Avoid distracting users who are on task and surface appropriate suggestions.

- Higher Conversion: Empirical testing shows optimized autocomplete drives clicks, add-to-cart events, and revenue.

- Data-backed Decisions: Use A/B testing to validate layouts, rules, and UI choices before rollout.

ExpertRec’s Autocomplete Features

- Supports personalized autocomplete ranking using session context and behavioral data

- Enables semantic de‑duplication to enhance variety in suggestion lists

- Offers configurable widget templates, mixing query, category, and product suggestions

- Provides timing and UI controls (character thresholds, layout variants) for fine-tuning

- Tracks impact metrics like suggestion CTR, add‑to‑cart rate, and zero-result visits for A/B analysis

Conclusion

Autocomplete is powerful, and small adjustments uncovered via A/B testing can make a big difference. From when suggestions appear and how diversity is managed, to embedding-based personalization and interface tweaks, each optimization improves relevance, usability, and revenue. By testing systematically and iterating smartly, you can transform autocomplete from a convenience feature into a conversion engine.

FAQs

1. What is A/B testing for autocomplete?

It’s the process of comparing variants of autocomplete behavior or layout to measure performance differences in metrics like clicks, search success, and conversion.

2. Why test pre‑merchandising suggestions?

Because for many users who know what they’re searching for, preloaded trending suggestions can distract or mislead, harming task-focused browsing.

3. How does semantic de‑duplication enhance search?

It filters out redundant or very similar suggestions—using embedding similarity—to increase diversity and surface unique query choices.

4. What personalization helps autocomplete perform better?

Context-based personalization, e.g. using recent queries or browsing behavior to influence suggestion ranking, drives relevance for returning or exploring users.

5. How quickly can an autocomplete A/B test deliver actionable insights?

With sufficient traffic, meaningful differences (e.g. a click-through lift) can emerge in days, making autocomplete one of the faster features to test effectively.
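
Whether a click-through difference is meaningful can be checked with a standard two-proportion z-test. The sketch below uses only the Python standard library; the function name and the sample counts are made up for illustration, and a production analysis would also fix the sample size in advance rather than stopping at the first significant result.

```python
import math


def two_proportion_z_test(clicks_a, views_a, clicks_b, views_b):
    """Return (z, two-sided p-value) for the CTR difference between B and A."""
    p_a = clicks_a / views_a
    p_b = clicks_b / views_b
    # Pooled click rate under the null hypothesis of no difference.
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    if se == 0:
        raise ValueError("standard error is zero; rates are all 0 or all 1")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: variant A at 10.0% CTR, variant B at 11.0% CTR.
z, p_value = two_proportion_z_test(1000, 10_000, 1100, 10_000)
significant = p_value < 0.05
```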