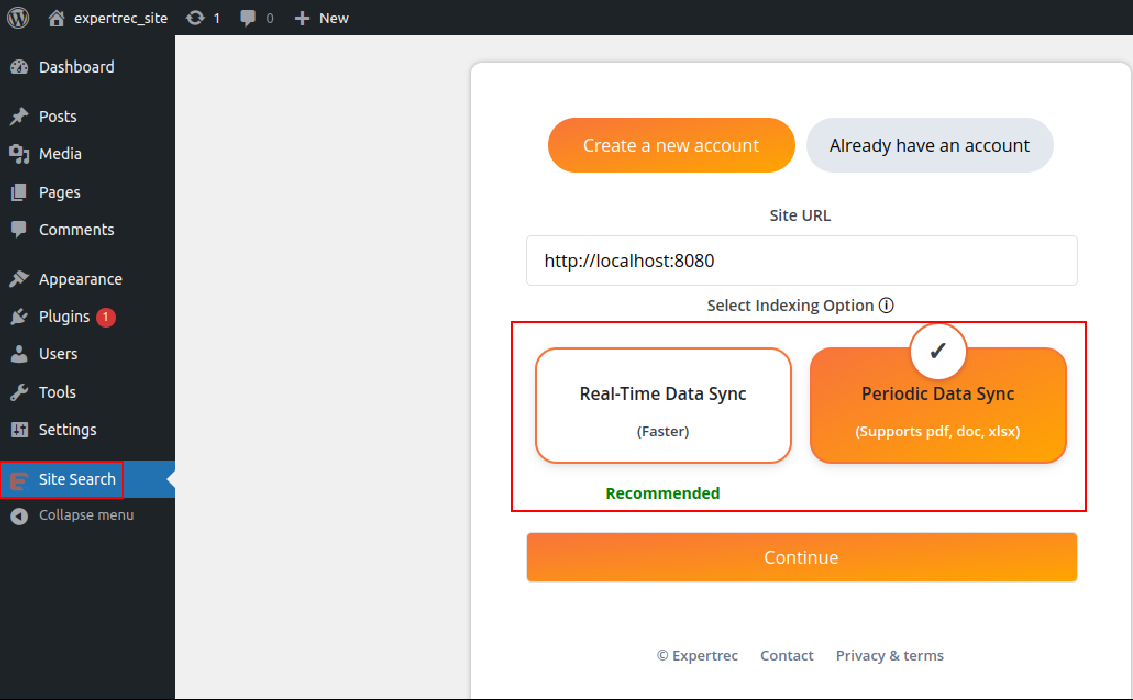

To display search results, ExpertRec builds a search index and stores your site’s data in it. When you update posts or pages, ExpertRec reflects the changes in search results as soon as possible. For WordPress sites, the plugin offers two integration methods: Real-Time Data Sync and Periodic Data Sync.

How do the two methods differ, and which one suits your business better? Read on to decide which one to use with your WordPress plugin.

Once you install the WordPress plugin, you will see the following screen.

The screen offers two options: Real-Time Data Sync and Periodic Data Sync.

Real-Time Data Sync: With this option, WordPress decides which posts and pages are sent to the index, and search results are updated accordingly. Note that the data indexed is the data stored in the WordPress database.

- If you have an e-commerce (WooCommerce) site, this works very well.

- Changes are reflected in search results faster.

- Post and page details (content, title, images) are all taken from the database.

- Works with all WordPress sites.

- If your website is a B2B networking site, this is the approach to choose.

- If your site is in a development environment (a staging build or localhost), this integration method is recommended.

In short, when updates are critical to your search results (for example, price changes or stock availability changes), we recommend this approach.

Periodic Data Sync: With this option, the ExpertRec crawler decides which posts and pages are added to the index, and search results are updated accordingly. The crawler loads each post or page as a browser would and extracts its data from the HTML.

- Changes take comparatively longer to appear in search results.

- The crawler fetches posts and pages as they change, and the search index is updated periodically.

- Works with all WordPress sites, but we do not recommend it for WooCommerce sites.

- Because the ExpertRec crawler accesses posts and pages from outside your network (over the Internet), sites on localhost builds or staging environments cannot take full advantage of this approach. For such development sites, some crawler settings need to be adjusted.

- If you want to search multiple sites and show results from all of them in a single search bar, this is the recommended approach.

- If you want your websites’ SEO parameters to influence search results, this option outperforms Real-Time Data Sync.

- If your site contains many PDFs, DOC files, Excel files, and other documents, use this option: it searches across such a wide range of document types better than Real-Time Data Sync.

In short, this approach suits sites that are updated less frequently, such as library, travel guide, or blogging sites. Real-time synchronization is not possible with this option; instead, you can schedule a crawl at daily, weekly, or monthly intervals, or start a re-crawl manually whenever you wish.

Crawling is the process of discovering new pages by following the links on known pages, and then following the links on those new pages in turn. Indexing is the process of storing, evaluating, and organizing content and the relationships between pages; parts of the index also guide how a search engine crawls. In a nutshell, website indexing is how search engines understand what your website and each page on it are about. It helps Google and Bing find your website, associate each page with searched topics, return it in search engine results pages (SERPs), and ultimately drive the right visitors to your content. Being indexed is what allows a site or page to appear in search engine results, which is usually the first step toward ranking and attracting visitors.

A search engine’s crawling of the Internet is a continuous operation that never truly ends. Search engines need to find newly published pages as well as changes to existing ones, without wasting time and resources on pages that are not strong search result candidates. A web crawler is a piece of software that follows all the links on a page to other pages or sites and repeats the process until it runs out of new links or pages to crawl.

Web crawlers go by a variety of names, including robots, spiders, search engine bots, or simply “bots”. They are called robots because they perform a specific task: travelling from link to link and collecting data from each website.
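The link-following loop described above can be sketched as a breadth-first traversal. This is a minimal illustration under stated assumptions, not ExpertRec’s or any search engine’s actual crawler: the site is a hypothetical in-memory dictionary mapping page paths to their outgoing links, and “visiting” a page only records it instead of fetching and indexing its HTML.

```python
from collections import deque


def crawl(start_url, get_links):
    """Breadth-first crawl: visit start_url, follow every outgoing link,
    and stop once no unseen pages remain."""
    seen = {start_url}          # pages already discovered
    queue = deque([start_url])  # pages waiting to be visited
    order = []                  # pages in the order they were visited
    while queue:
        url = queue.popleft()
        order.append(url)  # a real crawler would fetch and index the page here
        for link in get_links(url):
            if link not in seen:  # only queue pages we have not seen yet
                seen.add(link)
                queue.append(link)
    return order


# Hypothetical site: each page maps to the pages it links to.
SITE = {
    "/": ["/blog", "/shop"],
    "/blog": ["/blog/post-1", "/"],
    "/shop": ["/shop/item-1"],
    "/blog/post-1": ["/shop/item-1"],
    "/shop/item-1": [],
}

print(crawl("/", lambda url: SITE.get(url, [])))
# → ['/', '/blog', '/shop', '/blog/post-1', '/shop/item-1']
```

Tracking already-seen pages is what lets the crawler stop once it runs out of new links, even when pages link back to each other (as `/blog` links back to `/` here).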

Crawlers visit the following pages first: popular pages (those linked to often), high-quality pages, and pages on websites that are frequently updated with new, high-quality content. Such websites can therefore make good use of a crawl-based approach.

Crawling and indexing are critical to your website’s success, so make sure you do not mistakenly block Google from accessing your website, and check for and fix any problems on it. Based on the points above, choose whichever integration method best suits your requirements, depending on the nature of your business, your website configuration, and the services (B2B, B2C, C2C, C2B) you offer.