Sitemap:

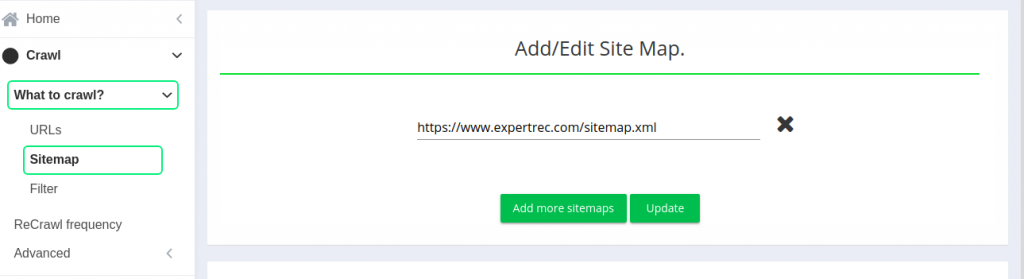

A sitemap is a more reliable way to guide the crawler to the pages that are important. Website admins generally create a sitemap for the site and link to it directly from robots.txt. Updating the sitemap in Expertrec is shown below.

To add another sitemap URL, click the “Add more sitemaps” button and follow the same URL rules as for the first one. Remember to update your sitemap whenever you create new pages or posts; otherwise those web documents will not appear in search results.
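If you are unsure which sitemap URLs your site advertises, they can be read from robots.txt. The following is a minimal sketch using Python's standard `urllib.robotparser`; the robots.txt content and the `example.com` domain are placeholders, and the helper name `sitemaps_from_robots` is made up for illustration.

```python
from typing import List
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt body; example.com is a placeholder domain.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Sitemap: https://www.example.com/sitemap.xml
Sitemap: https://www.example.com/blog/sitemap.xml
"""


def sitemaps_from_robots(robots_text: str) -> List[str]:
    """Return the sitemap URLs declared in a robots.txt body."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    # site_maps() (Python 3.8+) returns None when no Sitemap line exists.
    return parser.site_maps() or []


if __name__ == "__main__":
    for url in sitemaps_from_robots(ROBOTS_TXT):
        print(url)
```

Each URL printed this way is a candidate for the sitemap field, subject to the rules that follow.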

Rules for sitemaps:

- Localhost (127.0.0.1) URLs: A site running on your local system is not accessible from the internet, so the Expertrec crawler can never reach a sitemap at such a URL, and it will be discarded.

- Staging site sitemaps: A staging site is a clone of your production site and is meant for internal use only. Building a search index on top of a staging site is not useful, so it is recommended not to add sitemaps of staging sites.

- Intranet website sitemaps: Intranet websites are available only inside your enterprise network. The Expertrec crawler will not consider the sitemap of such an unreachable website.

- Absolute URLs inside the sitemap: URLs inside the sitemap should not be partial. It is recommended to use absolute URLs in the sitemap (scheme and host included) rather than relative paths.
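Some of the rules above can be checked before you submit a URL. The sketch below is an illustration only, not Expertrec's own validation: the function name `sitemap_url_problem` and the returned messages are invented here. It flags relative URLs, localhost, and private IP addresses. It cannot detect a staging site or an intranet site that uses an ordinary hostname, since those can only be recognized by whoever owns the site.

```python
import ipaddress
from typing import Optional
from urllib.parse import urlparse


def sitemap_url_problem(url: str) -> Optional[str]:
    """Return why a URL breaks one of the rules above, or None if none apply."""
    parts = urlparse(url)
    if parts.scheme not in ("http", "https") or not parts.hostname:
        return "not an absolute http(s) URL"
    host = parts.hostname
    if host == "localhost":
        return "localhost URL"
    try:
        ip = ipaddress.ip_address(host)
    except ValueError:
        # A regular hostname: reachability can only be confirmed by fetching it.
        return None
    if ip.is_loopback:
        return "localhost URL"
    if ip.is_private:
        return "private (intranet) address"
    return None


if __name__ == "__main__":
    for candidate in (
        "/blog/post-1",
        "http://127.0.0.1:8000/sitemap.xml",
        "http://192.168.1.10/sitemap.xml",
        "https://www.example.com/sitemap.xml",
    ):
        print(candidate, "->", sitemap_url_problem(candidate) or "ok")
```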

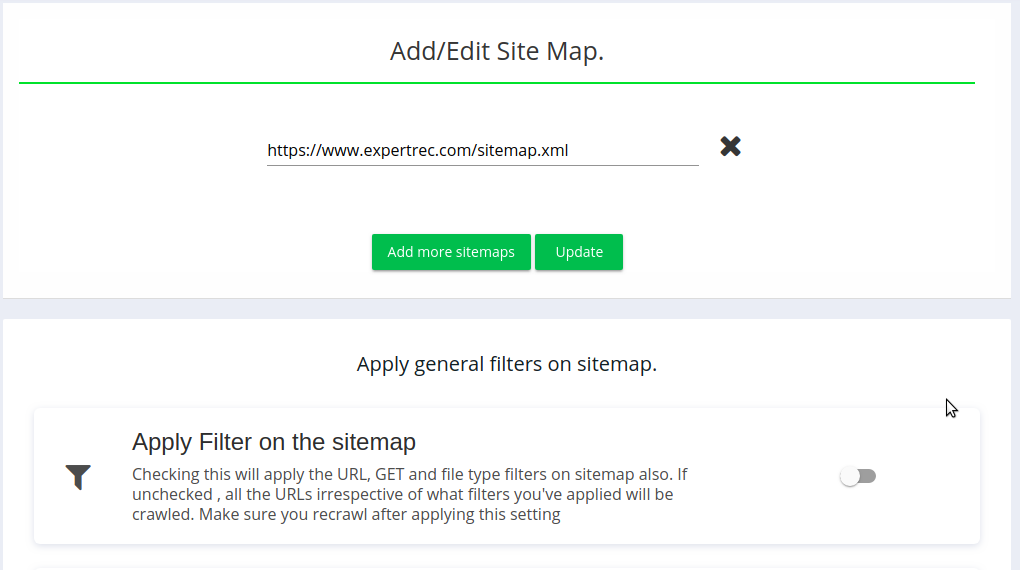

The Expertrec crawler lets you control the URLs in the sitemap section through the following options:

- Apply filter on Sitemap

- Crawl only Sitemap

1. Apply filter on Sitemap:

By default, all URLs present in the sitemap are considered for crawling. If you need a filter on top of the URLs in the sitemap, use this option. #filter shows the different ways to exclude or include URLs.

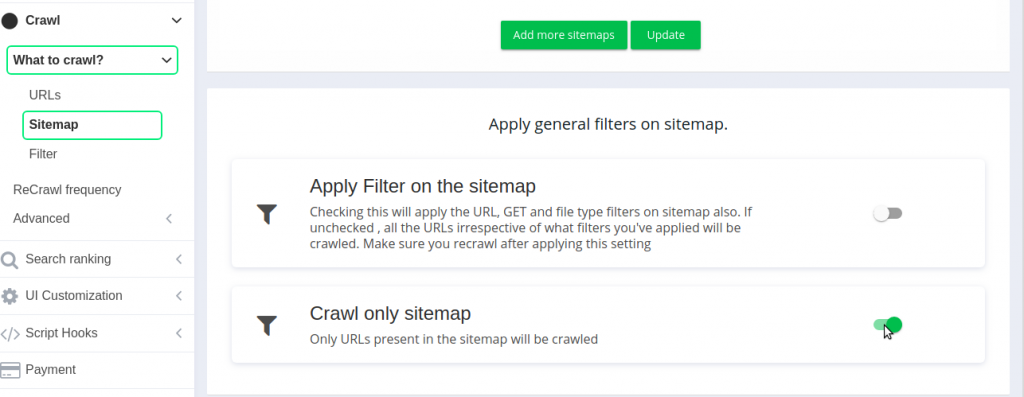
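To make the idea of filtering on top of a sitemap concrete, here is a rough sketch that reads the `<loc>` entries of a sitemap and drops those matching an exclusion pattern. It assumes regular-expression patterns purely for illustration; the filter syntax Expertrec actually accepts is the one described under #filter. The sample sitemap, the `example.com` URLs, and the function name `filtered_sitemap_urls` are all hypothetical.

```python
import re
import xml.etree.ElementTree as ET
from typing import Iterable, List

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap content with placeholder URLs.
SITEMAP_XML = """\
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/blog/hello-world</loc></url>
  <url><loc>https://www.example.com/tag/misc</loc></url>
</urlset>
"""


def filtered_sitemap_urls(xml_text: str, exclude_patterns: Iterable[str]) -> List[str]:
    """Return sitemap URLs that match none of the exclusion regexes."""
    root = ET.fromstring(xml_text)
    urls = [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]
    compiled = [re.compile(pattern) for pattern in exclude_patterns]
    return [url for url in urls if not any(rx.search(url) for rx in compiled)]


if __name__ == "__main__":
    # Keep everything except tag archive pages.
    for url in filtered_sitemap_urls(SITEMAP_XML, [r"/tag/"]):
        print(url)
```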

2. Crawl only Sitemap:

If you want the crawler to stick to the URLs present in the sitemap, enable this option. When it is enabled, the crawler discards all newly discovered URLs and keeps the crawl list limited to the URLs listed in the sitemap. Enable this option if your sitemap already links to every web document you expect in search results.
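The effect of this option on the crawl list can be pictured with a small sketch. It only models the behavior described above and is not Expertrec's implementation; the function `build_crawl_list` and its parameters are hypothetical names.

```python
from typing import Iterable, List


def build_crawl_list(
    sitemap_urls: Iterable[str],
    discovered_urls: Iterable[str],
    crawl_only_sitemap: bool,
) -> List[str]:
    """Combine sitemap URLs with links found while crawling.

    With crawl_only_sitemap=True, discovered links are discarded and the
    list contains sitemap URLs only.
    """
    # dict.fromkeys removes duplicates while keeping the original order.
    crawl_list = list(dict.fromkeys(sitemap_urls))
    if crawl_only_sitemap:
        return crawl_list
    seen = set(crawl_list)
    for url in discovered_urls:
        if url not in seen:
            seen.add(url)
            crawl_list.append(url)
    return crawl_list


if __name__ == "__main__":
    sitemap = ["https://www.example.com/", "https://www.example.com/pricing"]
    discovered = ["https://www.example.com/pricing", "https://www.example.com/old-page"]
    print(build_crawl_list(sitemap, discovered, crawl_only_sitemap=True))
    print(build_crawl_list(sitemap, discovered, crawl_only_sitemap=False))
```

With the option on, a page such as the hypothetical `/old-page` never enters the crawl list, which is why the sitemap needs to be complete before you enable it.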